TL;DR: SSE vs Streamable HTTP

What Changed:

- Old (HTTP+SSE): Required a permanently open connection + a separate `/sse` endpoint → connection dropped = lost everything

- New (Streamable HTTP): A single MCP endpoint (e.g. `/mcp`) + optional session IDs → connection dropped = session can be recovered

Key Improvements:

- Reliability: Session recovery vs complete restart on disconnect

- Efficiency: On-demand connections vs always-on connections

- Compatibility: Works with standard web infrastructure vs SSE-unfriendly proxies/firewalls

→ If you want to learn how to secure an MCP server with NGINX + Supergateway + Render, see our Secure MCP article on dev.to.

Introduction

Although the Model Context Protocol (MCP) has only been around for a few months, its relevance keeps growing as organizations recognize its potential. MCP turns standalone language models into agentic systems that can access live databases, interface with external services, handle diverse file formats, and orchestrate workflows across multiple platforms.

MCP closes the gap between language models and the systems they need to reach by providing a standardized framework that connects AI models with the outside world. It is an open standard developed by Anthropic for simple, standardized integration between AI models and external tools, acting as a universal connector that lets large language models (LLMs) dynamically interact with APIs, databases, and business applications. MCP was originally developed to improve Claude’s interaction with external systems, and Anthropic released it as open source in November 2024 to promote industry-wide adoption. By making MCP publicly accessible, Anthropic aimed to create a standard for communication between AI and tools that reduces dependence on proprietary integrations and enables greater modularity and interoperability between AI applications.

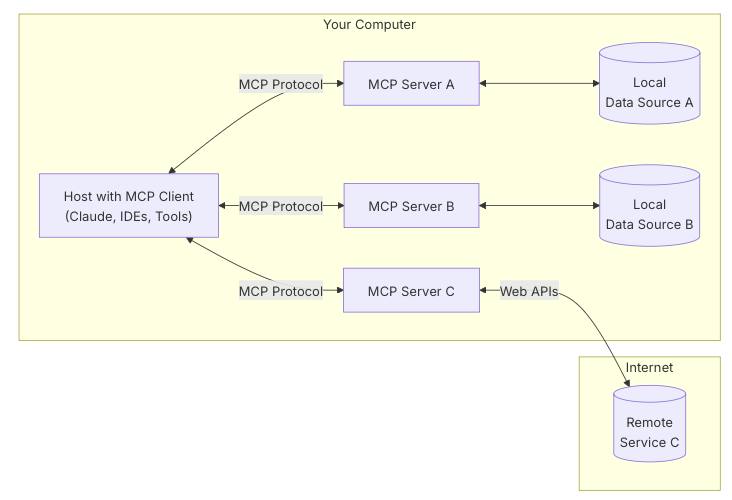

MCP is based on a client-server architecture:

- MCP Clients request information and execute tasks, each maintaining a one-to-one connection with a server

- MCP Servers provide access to external tools and data sources

- Host Applications (e.g., Claude Desktop) embed the clients and use MCP to connect models with tools

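Under the hood, clients and servers exchange JSON-RPC 2.0 messages. The sketch below is a rough illustration in plain Python (no MCP SDK) of what a client's tool invocation and the server's reply look like on the wire. The `get_weather` tool, its arguments, and the helper function names are hypothetical; `tools/call` with `name` and `arguments` is the MCP method for running a tool.

```python
import json

def make_request(request_id, method, params=None):
    """Build a JSON-RPC 2.0 request, the envelope MCP messages travel in."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message

def make_result(request_id, result):
    """Build a JSON-RPC 2.0 success response for the request with the same id."""
    return {"jsonrpc": "2.0", "id": request_id, "result": result}

# Client -> server: invoke a tool. "get_weather" and its arguments are hypothetical.
call = make_request(1, "tools/call", {"name": "get_weather", "arguments": {"city": "Berlin"}})

# Server -> client: tool output returned as a list of content items.
reply = make_result(1, {"content": [{"type": "text", "text": "Sunny, 21 degrees"}], "isError": False})

print(json.dumps(call))
print(json.dumps(reply))
```

The shared `id` is what ties each response to its request, so a client can keep several calls in flight at once.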

The ecosystem is already flourishing, with a dedicated Docker Hub namespace at https://hub.docker.com/u/mcp showcasing ready-to-use MCP servers and tools. This growing collection of containerized MCP implementations demonstrates the protocol’s rapid adoption and makes it easier than ever for developers to experiment with and deploy MCP-enabled solutions.

What is the Model Context Protocol?

MCP is more than just a protocol – it’s the foundation for building truly intelligent, interactive AI systems. Think of MCP as a universal bridge that allows AI models to seamlessly connect with the digital world around them. MCP serves as an abstraction layer that shields AI models from the complexities of direct API integration. Instead of requiring custom code for each service an AI might use, MCP provides a unified interface that works consistently across different tools and platforms. This standardization is crucial for building scalable, maintainable AI systems that can evolve alongside rapidly changing technology landscapes.
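To make the "unified interface" idea concrete, here is a minimal in-process sketch, assuming a hypothetical `ToolRegistry` class (not part of any MCP SDK). Every tool is described by a name, a description, and a JSON Schema for its input, and every tool is invoked the same way. The result shapes are loosely modeled on MCP's `tools/list` and `tools/call` results; the `add` and `shout` tools are illustrative.

```python
from typing import Any, Callable, Dict

class ToolRegistry:
    """Hypothetical registry: every tool is described and invoked the same way."""

    def __init__(self) -> None:
        self._tools: Dict[str, Dict[str, Any]] = {}

    def register(self, name: str, description: str,
                 input_schema: Dict[str, Any], fn: Callable[..., str]) -> None:
        self._tools[name] = {"description": description,
                             "inputSchema": input_schema, "fn": fn}

    def list_tools(self) -> Dict[str, Any]:
        # Loosely modeled on an MCP tools/list result: name, description, JSON Schema.
        return {"tools": [{"name": name, "description": t["description"],
                           "inputSchema": t["inputSchema"]}
                          for name, t in self._tools.items()]}

    def call_tool(self, name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
        # Loosely modeled on an MCP tools/call result. A real server would answer
        # an unknown tool name with a JSON-RPC error; this sketch simply raises.
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        text = self._tools[name]["fn"](**arguments)
        return {"content": [{"type": "text", "text": text}], "isError": False}

# Two unrelated capabilities behind one interface (both tools are illustrative).
registry = ToolRegistry()
registry.register("add", "Add two integers",
                  {"type": "object",
                   "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
                   "required": ["a", "b"]},
                  lambda a, b: str(a + b))
registry.register("shout", "Uppercase a piece of text",
                  {"type": "object",
                   "properties": {"text": {"type": "string"}},
                   "required": ["text"]},
                  lambda text: text.upper())

print(registry.list_tools())
print(registry.call_tool("add", {"a": 2, "b": 3}))
```

The caller never needs to know what a tool wraps (arithmetic, a database, a SaaS API). It only needs the name and a schema-conforming argument object.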

Source: Anthropic Documentation

The protocol supports various communication methods (primarily STDIO and Streamable HTTP) to accommodate different deployment scenarios while maintaining consistent semantics, making it versatile enough for everything from personal development to enterprise-scale AI applications.

The Problem MCP Solves:

- Traditional AI models are isolated – they can’t interact with external systems

- Each tool integration requires custom, complex code

- No standardization across different AI platforms and services

- Building agentic AI systems becomes fragmented and difficult to maintain

MCP in Action: Real-World Applications

MCP enables a wide range of practical applications across different domains. The table below highlights some key real-world implementations:

| Application Domain | Capabilities | Examples |
|---|---|---|
| Agentic AI Systems | Access live data | Connecting to databases and APIs in real-time |
| | Control external services | Managing email, calendars, and cloud platforms |
| | Process diverse files | Handling multiple document formats and data types |
| | Integrate with dev tools | Code analysis, testing, and deployment automation |
| | Orchestrate workflows | Managing multi-stage processes across systems |
| Enterprise AI Automation | Customer Support | AI agents accessing CRM systems, knowledge bases, and ticketing |
| | Data Analysis | Generating insights by connecting to data sources and analytics |
| | DevOps Automation | Monitoring systems, analyzing logs, managing infrastructure |
| | Content Management | Creating and distributing content across multiple platforms |
| Personal AI Assistants | Smart Home Integration | Controlling IoT devices and automating daily routines |
| | Personal Productivity | Managing emails, calendars, documents, and projects |
| | Research Assistance | Gathering information from multiple sources and generating reports |

MCP Communication Methods: STDIO vs Streamable HTTP

STDIO: The Local Approach

STDIO (Standard Input/Output) communication works much like running a command-line program on your computer. When your AI needs to use a local tool:

- Process Launch: The MCP client starts the MCP server as a local subprocess, which keeps running for the session

- Data Exchange: The client writes JSON-RPC messages, one per line, to the server's standard input (stdin)

- Result Capture: The server writes its responses to standard output (stdout)

- Response Integration: The client passes the results back to the AI for processing

This approach is ideal for local development, personal tools, and situations where you want to leverage existing command-line utilities.
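The steps above can be sketched with nothing but the Python standard library. This is an illustration under stated assumptions, not a real MCP client or server: the child process is a hypothetical toy that treats every message as a call to a made-up `shout` tool and skips the MCP initialization handshake. What it does show is the STDIO mechanics: launch once, write newline-delimited JSON-RPC to stdin, and read replies line by line from stdout.

```python
import json
import subprocess
import sys

# Hypothetical toy server (not a real MCP implementation): it treats every
# message as a call to a "shout" tool, reading one JSON-RPC message per line
# on stdin and writing one reply per line on stdout.
SERVER_SCRIPT = r'''
import json, sys
while True:
    line = sys.stdin.readline()
    if not line:          # EOF: the client closed our stdin, so shut down
        break
    msg = json.loads(line)
    text = msg["params"]["arguments"]["text"].upper()
    reply = {"jsonrpc": "2.0", "id": msg["id"],
             "result": {"content": [{"type": "text", "text": text}]}}
    sys.stdout.write(json.dumps(reply) + "\n")
    sys.stdout.flush()    # stdout is a pipe, so flush or the client waits forever
'''

def call_over_stdio(proc, request):
    """Write one newline-delimited JSON-RPC message and read one reply line."""
    proc.stdin.write(json.dumps(request) + "\n")
    proc.stdin.flush()
    return json.loads(proc.stdout.readline())

def run_demo():
    # The client launches the server once and reuses it for every call.
    proc = subprocess.Popen([sys.executable, "-c", SERVER_SCRIPT],
                            stdin=subprocess.PIPE, stdout=subprocess.PIPE, text=True)
    try:
        reply = call_over_stdio(proc, {
            "jsonrpc": "2.0", "id": 1, "method": "tools/call",
            "params": {"name": "shout", "arguments": {"text": "hello"}},
        })
        return reply["result"]["content"][0]["text"]
    finally:
        proc.stdin.close()   # signals EOF to the server
        proc.wait()
        proc.stdout.close()

print(run_demo())
```

Because messages are delimited by newlines, each JSON message must be serialized without embedded newlines, which `json.dumps` does by default.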

Streamable HTTP: The Network Approach

Streamable HTTP, introduced in the 2025-03-26 revision of the MCP specification, replaces the original HTTP+SSE transport with a more flexible and reliable design. It addresses the limitations of the previous mechanism while staying compatible with existing web infrastructure. SSE itself has not disappeared: a server can still answer a request with an SSE stream when it needs to send several messages.

How Streamable HTTP Works:

- Unified Endpoint: All communication happens through a single MCP endpoint (for example `/mcp`) that accepts POST and GET requests

- Session Management: Optional session IDs, carried in the `Mcp-Session-Id` header, enable state management and recovery

- Flexible Response Types: For each request, the server chooses between a standard JSON response and a streaming (SSE) response

- On-Demand Streaming: Resources are allocated only when needed, avoiding unnecessary long-lived connections

- Connection Recovery: If a connection drops, the client can reconnect with the same session ID and, where the server supports it, resume an interrupted stream using the `Last-Event-ID` header
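A stripped-down illustration of the single endpoint plus session IDs, using only the standard library. Assumptions to flag loudly: the server is a hypothetical toy, `demo/count` is an invented method that just counts calls per session, the `initialize` exchange is reduced to "hand out an ID", and only plain JSON responses are shown (no SSE streaming). The status codes follow the spec's guidance as we understand it: a missing session ID gets 400, and an unknown or expired one gets 404, which tells the client to re-initialize.

```python
import json
import threading
import urllib.request
import uuid
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

SESSIONS = {}  # session id -> per-session state (here just a counter)

class McpEndpoint(BaseHTTPRequestHandler):
    """Toy single-endpoint server: every JSON-RPC message is POSTed to /mcp."""

    def do_POST(self):
        if self.path != "/mcp":
            self.send_error(404)
            return
        message = json.loads(self.rfile.read(int(self.headers["Content-Length"])))
        extra_headers = {}
        if message.get("method") == "initialize":
            session_id = uuid.uuid4().hex            # server assigns the session ID
            SESSIONS[session_id] = {"calls": 0}
            extra_headers["Mcp-Session-Id"] = session_id
            result = {}                               # real servers return capabilities here
        else:
            session_id = self.headers.get("Mcp-Session-Id")
            if session_id is None:
                self.send_error(400)                  # session required after initialize
                return
            if session_id not in SESSIONS:
                self.send_error(404)                  # unknown/expired: client must re-initialize
                return
            SESSIONS[session_id]["calls"] += 1        # state survives across requests
            result = {"callsInSession": SESSIONS[session_id]["calls"]}
        body = json.dumps({"jsonrpc": "2.0", "id": message["id"], "result": result}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        for name, value in extra_headers.items():
            self.send_header(name, value)
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # silence per-request logging

def post(url, message, session_id=None):
    """POST one JSON-RPC message; return (Mcp-Session-Id header, parsed JSON body)."""
    request = urllib.request.Request(
        url, data=json.dumps(message).encode(), method="POST",
        headers={"Content-Type": "application/json",
                 "Accept": "application/json, text/event-stream"})
    if session_id is not None:
        request.add_header("Mcp-Session-Id", session_id)
    with urllib.request.urlopen(request) as response:
        return response.headers.get("Mcp-Session-Id"), json.loads(response.read())

def run_demo():
    server = ThreadingHTTPServer(("127.0.0.1", 0), McpEndpoint)   # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    url = f"http://127.0.0.1:{server.server_address[1]}/mcp"
    try:
        session_id, _ = post(url, {"jsonrpc": "2.0", "id": 1, "method": "initialize"})
        _, first = post(url, {"jsonrpc": "2.0", "id": 2, "method": "demo/count"}, session_id)
        _, second = post(url, {"jsonrpc": "2.0", "id": 3, "method": "demo/count"}, session_id)
        return session_id, first["result"]["callsInSession"], second["result"]["callsInSession"]
    finally:
        server.shutdown()
        server.server_close()

print(run_demo())
```

Each request is an ordinary, short-lived HTTP POST. Continuity comes from the session ID header rather than from keeping one connection open, which is why a dropped connection no longer means a lost session.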

Key Improvements over SSE:

- Better Reliability: Support for reconnection and session recovery

- Resource Efficiency: No requirement for permanent long-lived connections

- Infrastructure Compatibility: Uses ordinary HTTP requests, so it fits far more easily behind CDNs, load balancers, and API gateways

- Flexible Deployment: Suitable for everything from serverless functions to complex AI applications
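Stream resumption builds on standard SSE event IDs: a server may tag each event with an `id`, and a reconnecting client sends the last ID it received in a `Last-Event-ID` header so the server can replay what was missed. The sketch below shows that bookkeeping for one stream. It is a hypothetical, in-memory illustration: the payloads are placeholder strings rather than JSON-RPC messages, and a simple per-stream counter stands in for IDs that a real server must keep unique across all streams in a session.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

def sse_frame(event_id: int, data: str) -> str:
    """Format one Server-Sent Events frame with an id line and a data line."""
    return f"id: {event_id}\ndata: {data}\n\n"

@dataclass
class ResumableStream:
    """Hypothetical server-side log for one stream, kept so events can be replayed."""
    events: List[Tuple[int, str]] = field(default_factory=list)
    next_id: int = 1

    def emit(self, data: str) -> str:
        # Tag every event with an increasing id and remember it before sending.
        event_id = self.next_id
        self.next_id += 1
        self.events.append((event_id, data))
        return sse_frame(event_id, data)

    def replay_after(self, last_event_id: Optional[str]) -> List[str]:
        # last_event_id is the raw value of the client's Last-Event-ID header.
        start = int(last_event_id) if last_event_id is not None else 0
        return [sse_frame(i, d) for i, d in self.events if i > start]

stream = ResumableStream()
for step in ["progress 1/3", "progress 2/3", "progress 3/3"]:
    stream.emit(step)

# Suppose the client received only event 1 before its connection dropped.
missed = stream.replay_after("1")
print(missed)
```

How long to retain old events is a server design choice: a bounded buffer keeps memory in check, at the cost of not being able to resume after very long outages.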

Conclusion

The Model Context Protocol represents a fundamental shift in how we build AI systems – from isolated language models to truly interactive, agentic AI that can engage with the world around it. While the choice between STDIO and Streamable HTTP communication methods is important, the bigger picture is MCP’s role in enabling the next generation of AI applications.
